10 Docker recreates the same environment for your code on any machine

You can think of Docker as running separate operating systems (not precisely, but close enough), called containers, on a single machine.

Docker provides the ability to replicate the OS and its packages (e.g., Python modules) across machines, so you don’t encounter “hey, that worked on my computer” type issues.

10.1 A Docker image is a blueprint for your container

An image is a blueprint for creating your Docker container. In an image, you can define the modules to install, the variables to set, etc., and then use the image to create multiple containers.

Let’s consider our Airflow Dockerfile:

FROM apache/airflow:2.9.2

RUN pip install uv

COPY requirements.txt $AIRFLOW_HOME

RUN uv pip install -r $AIRFLOW_HOME/requirements.txt

COPY run_ddl.py $AIRFLOW_HOME

COPY generate_data.py $AIRFLOW_HOME

USER root

RUN apt-get update && \
    apt-get install -y --no-install-recommends \
    default-jdk

RUN curl https://archive.apache.org/dist/spark/spark-3.5.1/spark-3.5.1-bin-hadoop3.tgz -o spark-3.5.1-bin-hadoop3.tgz

# Change permissions of the downloaded tarball

RUN chmod 755 spark-3.5.1-bin-hadoop3.tgz

# Create the target directory and extract the tarball to it

RUN mkdir -p /opt/spark && tar xvzf spark-3.5.1-bin-hadoop3.tgz --directory /opt/spark --strip-components=1

ENV JAVA_HOME='/usr/lib/jvm/java-17-openjdk-amd64'

ENV PATH=$PATH:$JAVA_HOME/bin

ENV SPARK_HOME='/opt/spark'

ENV PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

The commands in the Dockerfile are run in order. Let’s go over the key commands:

FROM: We need a base operating system on which to set our configurations. We can also build upon existing Docker images available on Docker Hub (an online registry where people can upload and download images). In our example, we use the official Airflow Docker image.

COPY: Copies files or folders from our local filesystem into the image. In our image, we copy the Python requirements.txt file, the data generation script, and the table creation (DDL) script.

ENV: Sets the image’s environment variables. In our example, we set the Java and Spark paths necessary to run Spark inside our container.

ENTRYPOINT: Specifies the command or script that runs when a container starts from the image. In our example, we don’t use one. Still, it is common practice to use an entrypoint script to start the necessary programs.
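To make the "run in order" point concrete for the ENV lines, here is a minimal Python sketch that imitates how each PATH assignment builds on the value left by the previous one. It is only an illustration of the ordering, not how Docker itself evaluates a Dockerfile. The helper name apply_env_instructions and the starting PATH value are assumptions made for this example; the real base image’s PATH will differ.

```python
from string import Template


def apply_env_instructions(env, instructions):
    """Apply ENV-style (name, value) pairs in order.

    Each value may reference variables set earlier, as in the Dockerfile.
    """
    result = dict(env)
    for name, raw_value in instructions:
        # Expand $NAME references using the values known so far.
        result[name] = Template(raw_value).substitute(result)
    return result


# Assumed starting PATH, for illustration only.
base_env = {"PATH": "/usr/local/bin:/usr/bin"}

# The four ENV lines from the Dockerfile above, in the same order.
env_instructions = [
    ("JAVA_HOME", "/usr/lib/jvm/java-17-openjdk-amd64"),
    ("PATH", "$PATH:$JAVA_HOME/bin"),
    ("SPARK_HOME", "/opt/spark"),
    ("PATH", "$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin"),
]

final_env = apply_env_instructions(base_env, env_instructions)
print(final_env["PATH"])
```

Because the second PATH line reads the PATH produced by the first, the final value contains both the Java and the Spark directories. Swapping a PATH line ahead of the variable it references would fail in this sketch with a KeyError.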

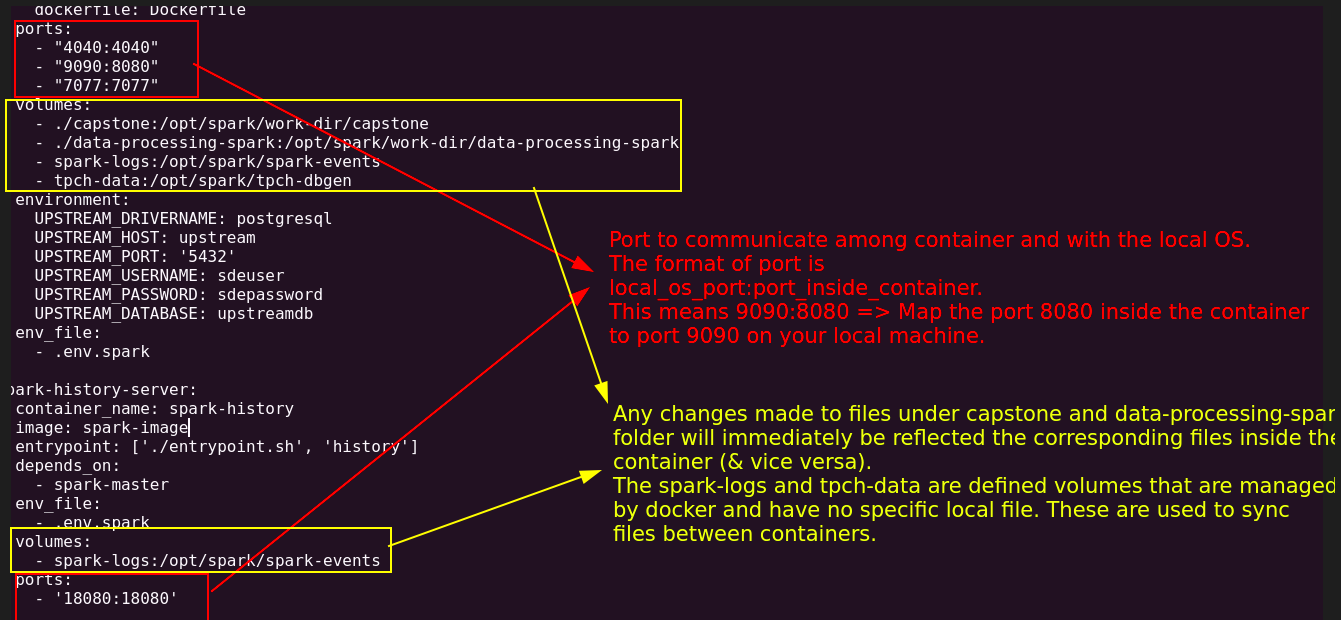

10.2 Sync data & code between a container and your local filesystem with volume mounts

When we are developing, we want to make changes to the code and see their impact immediately. While you can use COPY to copy your code when building a Docker image, the image will not reflect later changes in real time, and you will have to rebuild the image and recreate your container each time you change your code.

In cases where you want data/code to sync two ways between your local machine and the running Docker container, use mounted volumes.

In addition to syncing local files, volumes can also sync files between running containers.
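The difference between COPY and a mounted volume can be imitated on a plain filesystem. The sketch below is only an analogy, not how Docker implements mounts: a one-time directory copy stands in for COPY, and a symbolic link stands in for a mounted folder. The file and directory names (dag.py, task.log, image_copy, container_mount) are hypothetical, and the code assumes an OS that allows creating symlinks, such as Linux or macOS.

```python
import os
import shutil
import tempfile
from pathlib import Path


def demo_copy_vs_mount():
    """Contrast a one-time copy (COPY-like) with a shared view (mount-like)."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)

        # "Local" project folder with one file in it.
        local = root / "local"
        local.mkdir()
        (local / "dag.py").write_text("version 1")

        # COPY-like: a snapshot taken once, at "build time".
        copied = root / "image_copy"
        shutil.copytree(local, copied)

        # Mount-like: a second path that points at the same folder.
        mounted = root / "container_mount"
        os.symlink(local, mounted, target_is_directory=True)

        # Edit the local file after the snapshot was taken.
        (local / "dag.py").write_text("version 2")
        # Write a new file through the "container" side.
        (mounted / "task.log").write_text("written inside the container")

        return {
            "copied": (copied / "dag.py").read_text(),
            "mounted": (mounted / "dag.py").read_text(),
            "local_sees_container_file": (local / "task.log").exists(),
        }


result = demo_copy_vs_mount()
print(result)
```

The copied file stays at its build-time content, while the linked path sees the edit, and a file written through the linked path shows up in the local folder. That two-way visibility is the behavior the mounted folders give us during development.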

In our docker-compose.yml (which we will go over below), we mount the following folders in our local filesystem into a specified path inside a container.

volumes:
  - ./dags:/opt/airflow/dags
  - ./data:/opt/airflow/data
  - ./visualization:/opt/airflow/visualization
  - ./logs:/opt/airflow/logs
  - ./plugins:/opt/airflow/plugins
  - ./tests:/opt/airflow/tests
  - ./temp:/opt/airflow/temp
  - ./tpch_analytics:/opt/airflow/tpch_analytics

10.3 Ports to accept connections

Most data systems also expose runtime information, documentation, UI, and other components via ports. We have to inform Docker which ports to keep open so that they are accessible from the “outside”, in our case, your local browser.

In our docker-compose.yml (which we will go over below), we keep the following ports open:

ports:
  - 8080:8080
  - 8081:8081

Port 8080 is for the Airflow UI, and port 8081 is for the dbt docs.

Note: In 8080:8080, the right-hand side (RHS) 8080 is the port inside the container, and the left-hand side (LHS) 8080 is the port on your local machine that the container port is mapped to.
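As a small illustration of which side is which, the Python sketch below splits a HOST:CONTAINER string into its two parts. The names PortMapping and parse_port_mapping are hypothetical helpers written for this example, and the parser only handles the two-part form used in this file, not the other forms that Compose accepts. The 3000:80 mapping in the usage lines is also made up, chosen so that the two sides differ.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_port: int        # left-hand side: port on your local machine
    container_port: int   # right-hand side: port inside the container


def parse_port_mapping(mapping: str) -> PortMapping:
    """Parse a 'HOST:CONTAINER' string such as '8080:8080'."""
    host, separator, container = mapping.partition(":")
    if not separator or not host.isdigit() or not container.isdigit():
        raise ValueError(f"expected HOST:CONTAINER, got {mapping!r}")
    return PortMapping(host_port=int(host), container_port=int(container))


airflow_ui = parse_port_mapping("8080:8080")
print(airflow_ui.host_port, airflow_ui.container_port)

# Hypothetical mapping where the two sides differ.
example = parse_port_mapping("3000:80")
print(f"localhost:{example.host_port} -> container port {example.container_port}")
```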

Shown below is another example of how ports and volumes enable communication and data sharing, respectively, across containers and your OS:

10.4 Docker CLI to start a container and Docker Compose to coordinate starting multiple containers

We can use the Docker CLI to start containers based on an image. Let’s look at an example. To start a simple Metabase dashboard container, we can use the following:

docker run -d --name dashboard -p 3000:3000 metabase/metabase

The docker command will first look for an image named metabase/metabase on your local machine and, if it is not found there, on Docker Hub.

However, with most data systems, we will need to ensure multiple systems are running. While we can use the Docker CLI to do this, a better option is to use Docker Compose to orchestrate the different containers required.

With Docker Compose, we can define all our settings in one file and ensure that the containers are started in the order we prefer.
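To illustrate what "started in the order we prefer" means, here is a minimal Python sketch that derives a valid start order from a dependency map. The map mirrors the depends_on entries in the docker-compose.yml shown later in this section, where the Airflow services depend on postgres. This is a sketch of the ordering idea using a topological sort, not a description of Compose’s internal algorithm; the function name start_order is hypothetical.

```python
from graphlib import TopologicalSorter

# Each service maps to the set of services it depends on.
depends_on = {
    "postgres": set(),
    "minio": set(),
    "airflow-webserver": {"postgres"},
    "airflow-scheduler": {"postgres"},
    "airflow-init": {"postgres"},
}


def start_order(dependencies):
    """Return one order in which every service starts after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


order = start_order(depends_on)
print(order)
```

Several orders are valid here, since minio and postgres do not depend on each other, so only the relative position of postgres and the Airflow services is guaranteed. In our file, the dependency also uses condition: service_healthy, which means the dependent services wait until the postgres health check passes rather than only until the container has started.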

With our docker-compose.yml defined, starting our containers is a simple command, as shown below:

docker compose up -d

The command will, by default, look for a file called docker-compose.yml in the directory in which the command is run.

Let’s take a look at our docker-compose.yml file.

We have five services (each a collection of one or more containers):

Postgres: serves as the backend database for Airflow.

Airflow Webserver: serves the Airflow UI.

Airflow Scheduler: schedules and runs our Airflow jobs.

Airflow init: a short-lived container that creates all the PostgreSQL tables that Airflow uses to store run information.

Minio: serves as a local, open-source S3 alternative.

Since all our Airflow-based services share common settings, we define an x-airflow-common block at the top, whose settings are injected into the necessary services like so:

airflow-webserver:
  <<: *airflow-common

Our docker-compose.yml file:

version: '3'

x-airflow-common:
  &airflow-common
  build:
    context: ./containers/airflow/
  environment:
    &airflow-common-env
    AIRFLOW__CORE__EXECUTOR: LocalExecutor
    AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    AIRFLOW__CORE__FERNET_KEY: ''
    AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
    AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
    AIRFLOW__API__AUTH_BACKEND: 'airflow.api.auth.backend.basic_auth'
    AIRFLOW_CONN_POSTGRES_DEFAULT: postgres://airflow:airflow@postgres:5432/airflow
  volumes:
    - ./dags:/opt/airflow/dags
    - ./data:/opt/airflow/data
    - ./visualization:/opt/airflow/visualization
    - ./logs:/opt/airflow/logs
    - ./plugins:/opt/airflow/plugins
    - ./tests:/opt/airflow/tests
    - ./temp:/opt/airflow/temp
    - ./tpch_analytics:/opt/airflow/tpch_analytics
  user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
  depends_on:
    postgres:
      condition: service_healthy

services:
  postgres:
    container_name: postgres
    image: postgres:16
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      POSTGRES_DB: airflow
    volumes:
      - ./data:/input_data
    healthcheck:
      test: [ "CMD", "pg_isready", "-U", "airflow" ]
      interval: 5s
      retries: 5
    restart: always
    ports:
      - "5432:5432"

  airflow-webserver:
    <<: *airflow-common
    container_name: webserver
    command: webserver
    ports:
      - 8080:8080
      - 8081:8081
    healthcheck:
      test:
        [
          "CMD",
          "curl",
          "--fail",
          "http://localhost:8080/health"
        ]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always

  airflow-scheduler:
    <<: *airflow-common
    container_name: scheduler
    command: scheduler
    ports:
      - 10000:10000
    healthcheck:
      test:
        [
          "CMD-SHELL",
          'airflow jobs check --job-type SchedulerJob --hostname "$${HOSTNAME}"'
        ]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always

  airflow-init:
    <<: *airflow-common
    command: version
    environment:
      <<: *airflow-common-env
      _AIRFLOW_DB_UPGRADE: 'true'
      _AIRFLOW_WWW_USER_CREATE: 'true'
      _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
      _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}

  minio:
    image: 'minio/minio:latest'
    hostname: minio
    container_name: minio
    ports:
      - '9000:9000'
      - '9001:9001'
    environment:
      MINIO_ACCESS_KEY: minio
      MINIO_SECRET_KEY: minio123
      MINIO_ROOT_USER: minio
      MINIO_ROOT_PASSWORD: minio123
    command: server --console-address ":9001" /data

10.5 Executing commands in your Docker container

Using the exec command, you can submit commands to be run in a specific container. For example, we can use the following to open a bash terminal in our scheduler container. Note that the name scheduler comes from the container_name setting.

docker exec -ti scheduler bash
# You will be in the scheduler container's bash shell
# try some commands
pwd
exit # exit the container

Note that -ti indicates that the command will run in interactive mode. As shown below, we can also run a command in non-interactive mode and obtain its output.

docker exec scheduler echo hello

# prints hello
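If you want to script such non-interactive calls, one option is to build the same docker exec command from Python and run it with the standard subprocess module. The sketch below is a minimal example under that assumption; docker_exec_argv and run_in_container are hypothetical helper names, and run_in_container requires the Docker CLI to be installed and the named container to be running.

```python
import shlex
import subprocess


def docker_exec_argv(container, command, interactive=False):
    """Build the argument list for `docker exec`."""
    argv = ["docker", "exec"]
    if interactive:
        argv.append("-ti")  # same flag as in the interactive example above
    argv.append(container)
    argv.extend(shlex.split(command))  # split the command like a shell would
    return argv


def run_in_container(container, command):
    """Run a non-interactive command in a container and return its stdout.

    Assumes the Docker CLI is available and the container is running.
    """
    completed = subprocess.run(
        docker_exec_argv(container, command),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return completed.stdout


if __name__ == "__main__":
    # Only prints the command; call run_in_container to execute it.
    print(docker_exec_argv("scheduler", "echo hello"))
```

Passing the command as a list of arguments, rather than as one shell string, avoids a second round of shell quoting on your local machine.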