import requests

url = "https://pokeapi.co/api/v2/pokemon/1"

response = requests.get(url)

print(response.json())5 Python has libraries to read and write data to (almost) any system

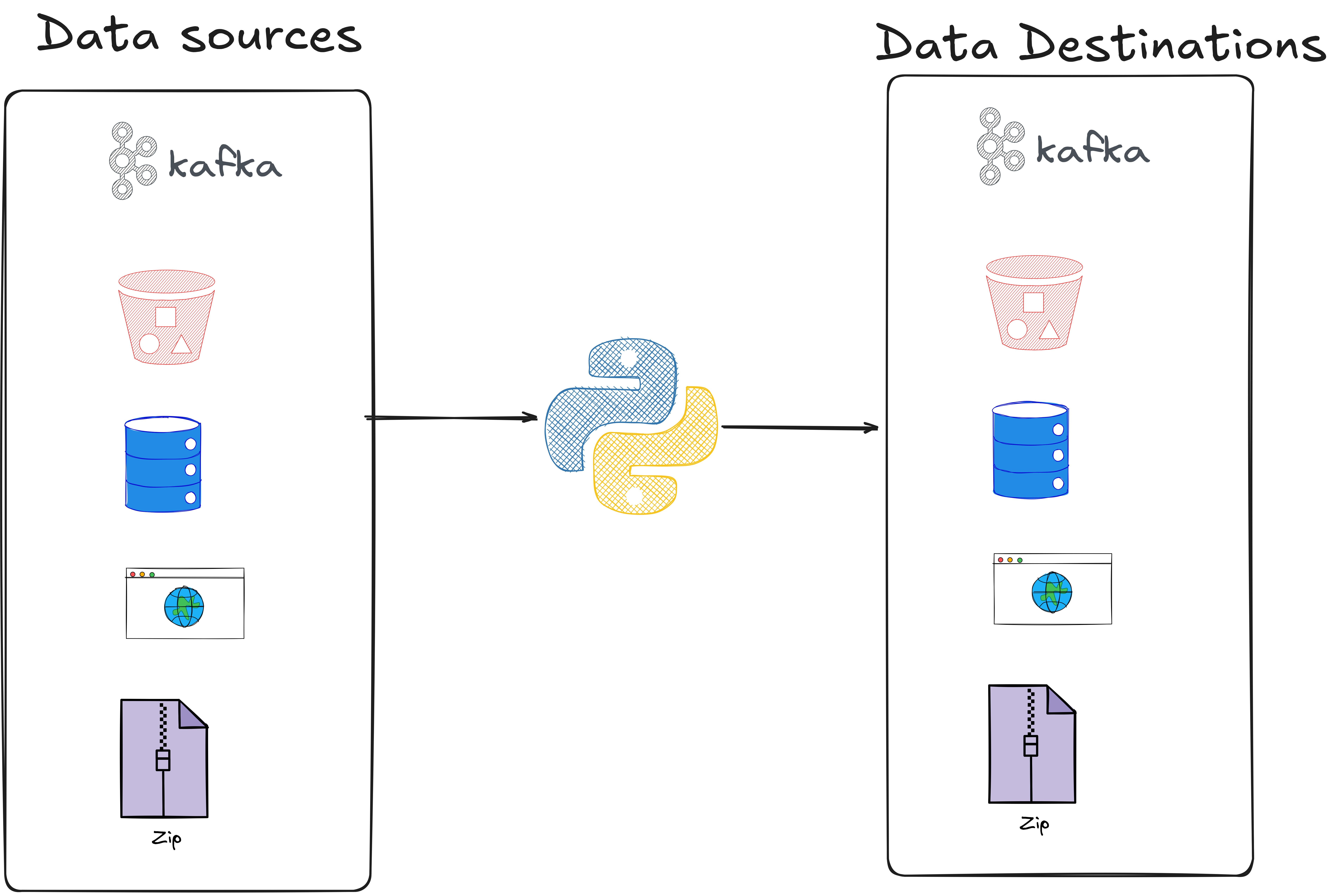

Python has multiple libraries that enable reading from and writing to various systems. Almost all systems these days have a Python libraries to interact with it.

For data engineering this means that one can use Python to interact with any part of the stack. Let’s look at the types of systems for reading and writing and how Python is used there:

Database drivers: These are libraries that you can use to connect to a database. Database drivers require you to use credentials to create a connection to your database. Once you have the connection object, you can run queries, read data from your database in Python, etc. Some examples are psycopg2, sqlite3, duckdb, etc.Cloud SDKs: Most cloud providers (AWS, GCP, Azure) provide their own SDK(Software Development Kit). You can use the SDK to work with any of the cloud services. In data pipelines, you would typically use the SDK to extract/load data from/to a cloud storage system(S3, GCP Cloud store, etc). Some examples of SDK are AWS, which has boto3; GCP, which has gsutil; etc.APIs: Some systems expose data via APIs. Essentially, a server will accept an HTTPS request and return some data based on the parameters. Python has the popularrequestslibrary to work with APIs.Files: Python enables you to read/write data into files with standard libraries(e.g.,csv). Python has a plethora of libraries available for specialized files like XML, xlsx, parquet, etc.SFTP/FTP: These are servers typically used to provide data to clients outside your company. Python has tools like paramiko, ftplib, etc., to access the data on these servers.Queuing systems: These are systems that queue data (e.g., Kafka, AWS Kinesis, Redpanda, etc.). Python has libraries to read data from and write data to these systems, e.g., pykafka, etc.

Read data from the pokemon api https://pokeapi.co/api/v2/pokemon/1 using requests library. Use the get method. Documentation reference

Read data from a local file ./data/customer.csv using the open function and csv reader.

import csv

data_location = "./data/customer.csv"

with open(data_location, "r", newline="") as csvfile:

csvreader = csv.reader(csvfile)

next(csvreader) # Skip header row

for row in csvreader:

print(row)

breakUse the BeautifulSoup library to parse the html data from the url https://example.com and find all the anchor html tags and print the hrefs.

import requests

from bs4 import BeautifulSoup

url = 'https://example.com'

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

for link in soup.find_all('a'):

print(link.get('href'))5.1 Exercises

- Fetch Data from API and Save Locally. Pull Pokemon data from the PokeAPI and save it to a local file. The local JSON file should contain Pokemon data for Bulbasaur (ID: 1)

import requests

import json

data_api = "https://pokeapi.co/api/v2/pokemon/1/"

local_file = "pokemon_data.json"

# TODO:

# 1. Make a GET request to data_api;

# Ref docs: https://docs.python-requests.org/en/latest/user/quickstart/#response-content

# 2. Extract the JSON response

# Ref docs: https://docs.python-requests.org/en/latest/user/quickstart/#json-response-content

# 3. Write the JSON data to local_file

# Open a file writer

# Ref docs: https://docs.python.org/3/tutorial/inputoutput.html#methods-of-file-objects and how to

# Save json into the open file writer

# Ref docs https://docs.python.org/3/tutorial/inputoutput.html#saving-structured-data-with-json- Read and Display Local File Contents. Read the previously saved JSON file and print its name and id.

# TODO:

# 1. Use Python standard libraries to open local_file

# Ref docs: https://docs.python.org/3/tutorial/inputoutput.html#methods-of-file-objects

# 2. Use json.load to convert it into a json

# Ref docs: https://docs.python.org/3/library/json.html#json.load

# 3. Read the name and id from the json, as you would from a dictionary- Parse Data and Insert into SQLite Database. Extract specific Pokemon attributes and store them in a SQLite database.

Table Schema:

CREATE TABLE pokemon (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

base_experience INTEGER

);import sqlite3

import json

local_file = "pokemon_data.json"

database_file = "pokemon.db"

# Open a sqlite3 connection

conn = sqlite3.connect(database_file)

cursor = conn.cursor()

# make sure to commit after each interaction (that modifies our database table) https://docs.python.org/3/library/sqlite3.html#sqlite3.Connection.commit

# TODO:

# 1. Create a SQLite3 table with columns: id, name, base_experience

# Ref docs: https://docs.python.org/3/library/sqlite3.html#sqlite3.Cursor.execute

# 2. Open and read the local_file using Python standard libraries

# 3. Parse the JSON data to extract id, name, and base_experience

# 4. Insert the extracted data into the SQLite table;

"""

cursor.execute('''

INSERT INTO pokemon (id, name, base_experience)

VALUES (?, ?, ?)

''', (pokemon_id, pokemon_name, base_experience))

"""

# 5. Verify insertion by querying the pokemon table and printing the data

# Ref docs: https://docs.python.org/3/library/sqlite3.html#sqlite3.Cursor.fetchall